In statistics, regression analysis is a technique we use to understand the relationship between a predictor variable, x, and a response variable, y.

When we conduct regression analysis, we end up with a model that tells us the predicted value for the response variable based on the value of the predictor variable.

One way to assess how “good” our model fits a given dataset is to calculate the root mean square error, which is a metric that tells us how far apart our predicted values are from our observed values, on average.

The formula to find the root mean square error, more commonly referred to as RMSE, is as follows:

RMSE = √[ Σ(Pi – Oi)2 / n ]

where:

- Σ is a fancy symbol that means “sum”

- Pi is the predicted value for the ith observation in the dataset

- Oi is the observed value for the ith observation in the dataset

- n is the sample size

Technical Notes:

- The root mean square error can be calculated for any type of model that produces predicted values, which can then be compared to the observed values of a dataset.

- The root mean square error is also sometimes called the root mean square deviation, which is often abbreviated as RMSD.

Next, let’s look at an example of how to calculate root mean square error in Excel.

How to Calculate Root Mean Square Error in Excel

There is no built-in function to calculate RMSE in Excel, but we can calculate it fairly easily with a single formula. We’ll show how to calculate RMSE for two different scenarios.

Scenario 1

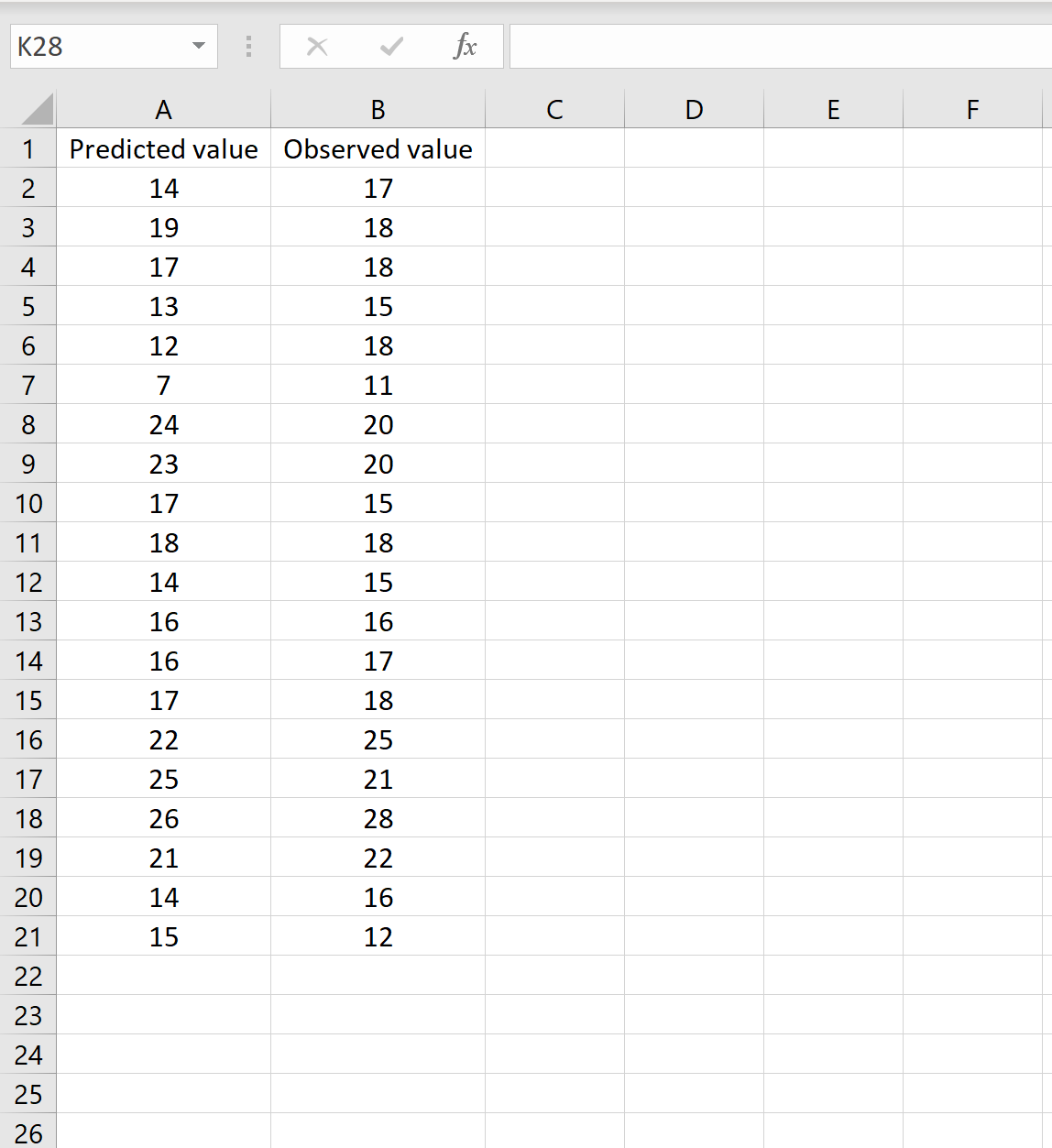

In one scenario, you might have one column that contains the predicted values of your model and another column that contains the observed values. The image below shows an example of this scenario:

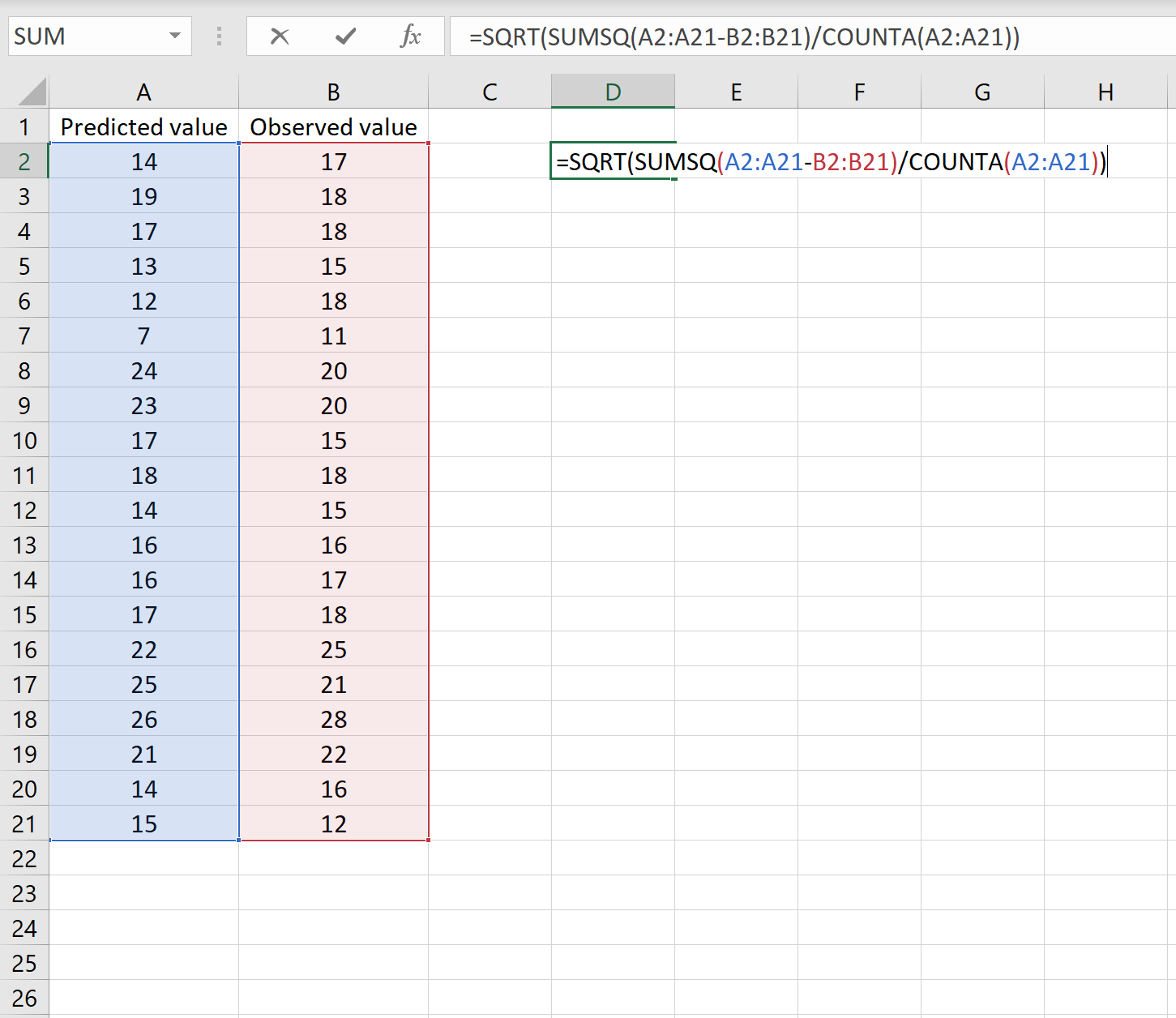

If this is the case, then you can calculate the RMSE by typing the following formula into any cell, and then clicking CTRL+SHIFT+ENTER:

=SQRT(SUMSQ(A2:A21-B2:B21) / COUNTA(A2:A21))

This tells us that the root mean square error is 2.6646.

The formula might look a bit tricky, but it makes sense once you break it down:

=SQRT(SUMSQ(A2:A21-B2:B21) / COUNTA(A2:A21))

- First, we calculate the sum of the squared differences between the predicted and observed values using the SUMSQ() function.

- Next, we divide by the sample size of the dataset using COUNTA(), which counts the number of cells in a range that are not empty.

- Lastly, we take the square root of the whole calculation using the SQRT() function.

Scenario 2

In another scenario, you may have already calculated the differences between the predicted and observed values. In this case, you will only have one column that displays the differences.

The image below shows an example of this scenario. The predicted values are displayed in column A, the observed values in column B, and the difference between the predicted and observed values in column D:

If this is the case, then you can calculate the RMSE by typing the following formula into any cell, and then clicking CTRL+SHIFT+ENTER:

=SQRT(SUMSQ(D2:D21) / COUNTA(D2:D21))

This tells us that the root mean square error is 2.6646, which matches the result that we got in the first scenario. This confirms that these two approaches to calculating RMSE are equivalent.

The formula we used in this scenario is only slightly different than the one we used in the previous scenario:

=SQRT(SUMSQ(D2:D21) / COUNTA(D2:D21))

- Since we already calculated the differences between the predicted and observed values in column D, we can calculate the sum of the squared differences by using the SUMSQ() function with just the values in column D.

- Next, we divide by the sample size of the dataset using COUNTA(), which counts the number of cells in a range that are not empty.

- Lastly, we take the square root of the whole calculation using the SQRT() function.

How to Interpret RMSE

As mentioned earlier, RMSE is a useful way to see how well a regression model (or any model that produces predicted values) is able to “fit” a dataset.

The larger the RMSE, the larger the difference between the predicted and observed values, which means the worse the regression model fits the data. Conversely, the smaller the RMSE, the better a model is able to fit the data.

It can be particularly useful to compare the RMSE of two different models with each other to see which model fits the data better.

For more tutorials in Excel, be sure to check out our Excel Guides Page, which lists every Excel tutorial on Statology.